
Introduction

Setup

to include: http://wiki.bluelightav.org/display/BLUE/LVM+Howto

On Debian and Ubuntu Linux

We assume that you are creating the LVM on a RAID device called md1 (/dev/md1). Adapt this to your situation.

Also replace pinkflower with a relevant name identifying your volume group.

Create the physical volume

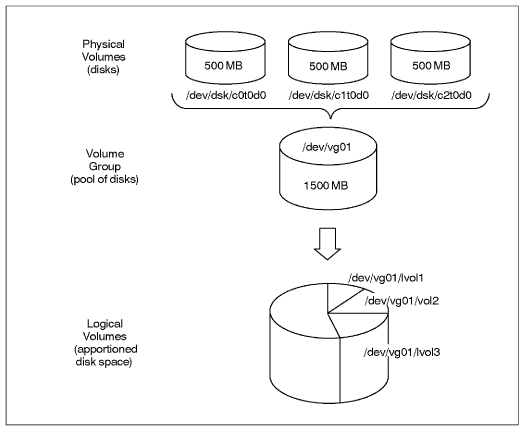

LVM creates virtual block devices out of other block devices. In the simplest case, "other block devices" are hard disk partitions. LVM calls them "physical volumes" or PVs.

LVM groups physical volumes into volume groups (VGs).

From the volume groups' pool of blocks, LVM creates virtual block devices which it calls "logical volumes" or LVs.

The diagram above shows all of this.

In the standard Blue Light setup, the physical volumes are not disk partitions but md devices (Linux software RAID, "multiple device" arrays).

Setup

In the examples the LVM physical volume (PV) is md1.

Create a physical volume (PV)

Note: this step can be omitted; vgcreate in the next step initialises the PV automatically.

```
pvcreate /dev/md1
```

Create

...

a volume group (VG)

The volume group name used to be the first two dot-separated components of the hostname; now we use the FQDN.

```
vgcreate pinkflower /dev/md1
```
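The two naming conventions described above can be sketched as shell functions. This is a minimal sketch: `old_vg_name`, `create_host_vg` and the host name are hypothetical, and `run` is a small dry-run wrapper (not part of LVM) that only prints each command unless `DRY_RUN=0` is set, so the command can be reviewed before anything touches the disk.

```shell
# Dry-run wrapper: print the command unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Old convention (hypothetical helper): first two dot-separated components.
old_vg_name() {
    printf '%s\n' "$1" | cut -d. -f1,2
}

# Current convention (hypothetical helper): the FQDN itself is the VG name.
create_host_vg() {
    run vgcreate "$1" "$2"
}

# On the target machine you would pass "$(hostname --fqdn)" instead.
create_host_vg host.site.example.org /dev/md1
```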

Create the logical volumes

...

The Blue Light volume group naming convention is designed to make names unique among the computers we support. This allows us to put HDDs containing LVM storage into any of those computers for recovery without volume group name clashes.

Create logical volumes (LVs)

For example (10G gives a size of 10 GiB; LVM size suffixes are powers of 1024):

```
lvcreate -L 10G -n root pinkflower
```
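An LV is allocated in whole physical extents, which are 4 MiB by default for vgcreate (`vgs -o vg_name,vg_extent_size` shows the actual value). A small worked example of what `-L 10G` amounts to, assuming that default extent size:

```shell
# -L 10G means 10 GiB, i.e. 10 * 1024^3 bytes.
size_gib=10
bytes=$((size_gib * 1024 * 1024 * 1024))
echo "bytes: $bytes"

# Number of extents, assuming the default 4 MiB physical extent size.
extent_mib=4
extents=$((size_gib * 1024 / extent_mib))
echo "extents: $extents"
```

With 4 MiB extents, `lvcreate -l 2560 -n root pinkflower` would therefore request the same size as `-L 10G`.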

If you want to create other volumes, check the space left in the volume group with the following command:

```
vgs
```

To add another volume, repeat the lvcreate step.
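A sketch of repeating the lvcreate step for a hypothetical layout (the swap and home names and the 4G size are illustrative only), where the last LV takes all remaining free space with `-l 100%FREE`. `run` is a hypothetical dry-run wrapper that prints each command unless `DRY_RUN=0` is set.

```shell
# Dry-run wrapper: print the command unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Hypothetical layout: fixed-size root and swap, home gets what is left.
create_lvs() {
    run lvcreate -L 10G -n root pinkflower &&
    run lvcreate -L 4G -n swap pinkflower &&
    run lvcreate -l 100%FREE -n home pinkflower
}

create_lvs
```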

Create the file system

mkfs.ext4 /dev/pinkflower/root

Check the logical volumes

lvs

Resize an LVM partition

http://wiki.bluelightav.org/display/BLUE/How+to+resize+LVM+partitions

Rename an LV

...

ltsp.th |

Operations

Show current LVM usage

Overall picture: lsblk (not available on Debian 6)

Show PVs: pvs

Show VGs: vgs

Show LVs: lvs

Activate LVM volume groups (VGs)

This is the command that is run during early boot to activate all volume groups.

```
vgchange -ay
```
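vgchange also accepts a VG name, which is handy when HDDs from another computer have been attached for recovery and only their VG should be touched. A sketch using the example VG name from this page; `run` is a hypothetical dry-run wrapper that prints each command unless `DRY_RUN=0` is set.

```shell
# Dry-run wrapper: print the command unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

vg=pinkflower
# Activate only this VG instead of all VGs.
run vgchange -ay "$vg"
# Deactivate it again, e.g. before detaching its HDDs.
# All file systems on its LVs must be unmounted first.
run vgchange -an "$vg"
```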

Format a logical volume (LV)

This is exactly the same as formatting any other sort of block device; only the device file path (/dev/<vg>/<lv>) is LV-specific, for example /dev/ltsp.th/root.
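The same LV is also reachable as /dev/mapper/<vg>-<lv>, the form used in the chroot instructions at the end of this page. Device-mapper doubles any hyphen inside the VG or LV name so that the single separating hyphen stays unambiguous. A sketch of that mapping; `dm_name` is a hypothetical helper and the VG/LV pairs are illustrative.

```shell
# Print the /dev/mapper name for a VG and an LV: hyphens inside either
# name are doubled, and a single hyphen separates the two parts.
dm_name() {
    vg=$(printf '%s' "$1" | sed 's/-/--/g')
    lv=$(printf '%s' "$2" | sed 's/-/--/g')
    printf '%s-%s\n' "$vg" "$lv"
}

dm_name ltsp.th root                        # ltsp.th-root
dm_name pinkflower atlassian.blue.av-disk   # pinkflower-atlassian.blue.av--disk
```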

Extend a logical volume (LV)

When multiple PVs are used, keep each LV on a single PV if possible, to minimise the effect of a storage device failure.

LVs are extended with the lvextend command. Its --help output is, er, helpful.

Single PV

Extending an LV on a single PV may require moving the LV to a PV which has more space or moving other LV(s) off the initial PV.

Find which PVs are hosting each LV:

lvs -o lv_name,devices

Find how much space is available on each PV:

pvs

Finally, specify the PV on the lvextend command line. Examples:

lvextend --size 400G /dev/bafi.backup/th /dev/sdc1

lvextend --extents +55172 /dev/bafi.backup/blue /dev/sdb3
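lvextend grows only the LV; the file system inside it still has to be enlarged, either in the same step with `--resizefs` or afterwards with `resize2fs` for ext2/3/4. A sketch reusing the LV and PV from the first example, with the +20G increment chosen purely for illustration; `run` is a hypothetical dry-run wrapper that prints each command unless `DRY_RUN=0` is set.

```shell
# Dry-run wrapper: print the command unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Grow the LV by 20 GiB, allocating on /dev/sdc1, and resize its file system.
run lvextend --resizefs --size +20G /dev/bafi.backup/th /dev/sdc1

# Alternative for ext2/3/4, used instead of the command above:
#   lvextend --size +20G /dev/bafi.backup/th /dev/sdc1
#   resize2fs /dev/bafi.backup/th
```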

Reduce a logical volume (LV)

Use the lvreduce command.
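Shrinking is the dangerous direction: the file system must be shrunk before the LV, otherwise data near the end of the LV is lost. `lvreduce --resizefs` does this in the right order, and ext4 cannot be shrunk while mounted. A sketch with an illustrative target size and a hypothetical mount point /mnt/th; `shrink_lv` and `run` (a dry-run wrapper that prints each command unless `DRY_RUN=0` is set) are hypothetical helpers.

```shell
# Dry-run wrapper: print the command unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Unmount, shrink file system and LV together, then remount.
# Each step runs only if the previous one succeeded.
shrink_lv() {
    lv=$1 size=$2 mnt=$3
    run umount "$mnt" &&
    run lvreduce --resizefs --size "$size" "$lv" &&
    run mount "$lv" "$mnt"
}

shrink_lv /dev/bafi.backup/th 300G /mnt/th
```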

Move storage or logical volumes (LVs) between physical volumes (PVs)

Move storage

This is useful when replacing physical volumes (PVs). After adding the new HDDs to the volume group (usually as a RAID 1 md array), the LVs can be moved from the old PV to the new one. Finally, the old PV can be removed from the VG and the old HDDs removed, usually after powering down.

For example:

pvmove /dev/md1 /dev/md3
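The whole PV replacement described above can be sketched as one sequence: add the new PV to the VG, move the data, drop the old PV from the VG and wipe its LVM label. `replace_pv` and `run` (a dry-run wrapper that prints each command unless `DRY_RUN=0` is set) are hypothetical helpers; the VG name is the example one from this page.

```shell
# Dry-run wrapper: print the command unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Migrate a VG from an old PV to a new one; stop at the first failure.
replace_pv() {
    vg=$1 old=$2 new=$3
    run vgextend "$vg" "$new" &&
    run pvmove "$old" "$new" &&
    run vgreduce "$vg" "$old" &&
    run pvremove "$old"
}

replace_pv pinkflower /dev/md1 /dev/md3
```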

Move a logical volume (LV)

For example:

pvmove --name atlassian.blue.av-disk /dev/md1 /dev/sdb1

Rename a logical volume (LV)

Unmount any file system contained in the LV, then rename the LV:

```
umount /dev/<vgname>/<oldlvname>
lvrename <vgname> <oldlvname> <newlvname>
```

done
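A sketch of the rename with hypothetical names (LV home renamed to data in VG pinkflower). `run` is a hypothetical dry-run wrapper that prints each command unless `DRY_RUN=0` is set. References to the old name, for example in /etc/fstab, keep pointing at a path that no longer exists and need updating by hand.

```shell
# Dry-run wrapper: print the command unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Unmount, then rename. Stops if umount fails (e.g. LV not mounted);
# in that case run lvrename directly.
rename_lv() {
    vg=$1 old=$2 new=$3
    run umount "/dev/$vg/$old" &&
    run lvrename "$vg" "$old" "$new"
}

rename_lv pinkflower home data
# Then look for references to the old name, e.g.:
#   grep -nE 'pinkflower(/|-)home' /etc/fstab
```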

Activate LVMs

```
vgchange -ay
```

...

Remove a Physical Volume (PV) from a Volume Group (VG)

vgreduce VG_name PV_name

For example:

vgreduce ls1 /dev/sdb2

Troubleshooting

Messages

File descriptor * leaked on * invocation. Parent PID *

It can be safely ignored. It can be suppressed by setting the LVM_SUPPRESS_FD_WARNINGS environment variable before running whatever produced the message.
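For example, in the shell from which the LVM commands are started (the value 1 is arbitrary here; the assumption is that LVM only checks whether the variable is set):

```shell
# Suppress the warning for all LVM commands started from this shell...
export LVM_SUPPRESS_FD_WARNINGS=1

# ...or only for a single invocation:
#   LVM_SUPPRESS_FD_WARNINGS=1 lvs
```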

The code that generates this message was added when it was alleged that LVM was leaking file descriptors. The message actually shows that the file descriptor had already been leaked by the calling (parent) process before the LVM program was started.

WARNING: lvmetad is running but disabled. Restart lvmetad before enabling it!

Can be safely ignored.

From https://wiki.gentoo.org/wiki/Talk:LVM#lvmetad_warning: "Normally lvmetad is not even enabled. Neither in initscripts nor in /etc/lvm/lvm.conf. But the lvm service has a useless rc_need on lvmetad. Remove it to get rid of those messages".

Recover a deleted logical volume (LV)

It can happen that you want to restore an "accidentally" deleted LV (deleted with lvremove). It is possible

...

The method explained in the link didn't work for me, or I missed something: the calculated hexadecimal offset did not correspond to the start of the needed sections, even though it was calculated correctly. So I did it manually.

Run less on the partition and scroll to the section containing the data you are looking for:

...

```
vgchange -ay --ignorelockingfailure
```

You are done
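Before digging through raw metadata by hand, it may be worth checking whether LVM's own metadata archive still holds the state from before the lvremove: by default LVM keeps copies of older VG metadata under /etc/lvm/archive, and vgcfgrestore can write one back. This only helps if the LV's data blocks have not been overwritten since. A sketch, assuming the archive is intact; the archive file name is a placeholder to be replaced with one reported by `--list`, and `run` is a hypothetical dry-run wrapper that prints each command unless `DRY_RUN=0` is set.

```shell
# Dry-run wrapper: print the command unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

vg=pinkflower
# 1. List archived metadata versions; pick one saved before the lvremove.
run vgcfgrestore --list "$vg"
# 2. Restore it (placeholder file name: replace with a real one from step 1).
run vgcfgrestore -f "/etc/lvm/archive/${vg}_XXXXX.vg" "$vg"
# 3. Activate the restored LV(s).
run vgchange -ay "$vg"
```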

Outdated

Resize existing file system without LiveCD

...

A great howto is here: http://www.gagme.com/greg/linux/raid-lvm.php

Chrooting and installing GRUB 2 on an LVM setup

Boot with a live CD or CrowBar, then:

```
# Activate the volume groups if the live CD has not already done so
vgchange -ay
mkdir /mnt/root
mount /dev/mapper/volume_group_name-root /mnt/root
mount /dev/mapper/volume_group_name-home /mnt/root/home
mount /dev/mapper/volume_group_name-var /mnt/root/var
mount /dev/sda1 /mnt/root/boot
mount -t proc none /mnt/root/proc
mount --bind /dev /mnt/root/dev
mount --bind /sys /mnt/root/sys
chroot /mnt/root
# The following commands are run inside the chroot
update-initramfs -u
update-grub
grub-install /dev/sda
```
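When done, leave the chroot (type `exit`) and unmount everything below /mnt/root before rebooting. A sketch; `umount -R` (recursive) needs a reasonably recent util-linux, so the reverse-order alternative is kept as a comment. `run` is a hypothetical dry-run wrapper that prints each command unless `DRY_RUN=0` is set.

```shell
# Dry-run wrapper: print the command unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Unmount /mnt/root and everything mounted below it.
run umount -R /mnt/root

# Older alternative: unmount in reverse order of mounting.
#   umount /mnt/root/sys /mnt/root/dev /mnt/root/proc /mnt/root/boot \
#          /mnt/root/var /mnt/root/home /mnt/root
```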